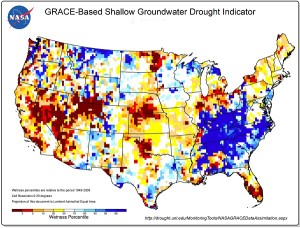

Drought has historically been the disaster that fails to focus our attention on its consequences until it is too late to take effective action. Earthquakes, hurricanes, tornadoes, and most floods have a quick onset that signals trouble and a clear end point that tells us it is time to recover and rebuild. Drought, by contrast, is a slow-onset event that sneaks up on whole regions and grinds on for months or years, leaving people exhausted, frustrated, and feeling powerless. Yet our species, and life itself, depend on water for survival.

Over time, our nation has responded to most types of disasters both with an overall response framework, centered on the Federal Emergency Management Agency, and with resources dedicated to specific hazards, such as the National Hurricane Center. Drought took longer to win the attention of Congress, but in 2006 Congress passed the National Integrated Drought Information System (NIDIS) Act, creating an interagency entity of that name led by the National Oceanic and Atmospheric Administration (NOAA). NIDIS was reauthorized in 2014, and its headquarters are in Boulder, Colorado.

For the last five years, I have been involved in various ways with NIDIS and the National Drought Mitigation Center (NDMC), an academic institute affiliated with the University of Nebraska–Lincoln. To date, the major product of that collaboration has been Planning and Drought, a Planning Advisory Service (PAS) Report published by the American Planning Association (APA) in early 2014. Both NIDIS and NDMC have helped make that report widely available among professionals and public officials engaged in preparing communities for drought. The need to engage community planners in this enterprise has been clear. Much of the Midwest was affected by drought in 2012, at the very time we were researching the report. Texas has suffered prolonged drought within the last few years, and California has yet to fully recover from a multi-year drought that drained many of its reservoirs. And while drought may seem less dangerous than violent weather or seismic events, in the last five years alone four drought episodes, each exceeding $1 billion in damages, have collectively caused nearly $50 billion in economic losses. The need to craft effective water conservation measures, and to account adequately for water demand when reviewing proposed development, has become obvious. We need to create communities that are more resilient in the face of drought.

Part of the NIDIS EPC Working Group discusses ongoing and future efforts during the Lincoln meeting.

Over the past decade, NIDIS has expanded its mission in a number of directions, including this need to engage communities in preparing for drought. It was this mission that brought me to Lincoln at the end of April for a meeting of the NIDIS Engaging Preparedness Communities (EPC) Working Group. This group brings together the advice and expertise of numerous organizations involved in drought, including not only APA, NOAA, and NDMC, but also state agencies like the Colorado Water Conservation Board, tribal organizations such as the Indigenous Waters Network, and academic experts in fields like agriculture and climatology. Over the ten years since NIDIS was created, this and other working groups have made considerable strides toward better understanding the impacts of drought on communities and regions, and toward increasing public access to drought information and predictions so that decision makers have a better basis for confronting the problem. NIDIS has conducted a number of training webinars, established online portals for databases and case studies, and otherwise tried to demystify what causes drought and how states and communities can deal with it. Our job for two days in Lincoln was simply to push the ball farther uphill: to help coordinate outreach and resources, especially for communication, so that the whole program becomes more effective over the next few years.

Much of that progress is captured in the NIDIS Progress Report, issued in January of this year. More importantly, this progress, together with the need to build further national capabilities for drought resilience, captured the attention of the White House. On March 21, President Obama signed a Presidential Memorandum that institutionalized the National Drought Resilience Partnership, and the Partnership issued an accompanying Federal Action Plan for long-term drought resilience. This plan builds on the existing muscle of NIDIS by laying out six national drought resilience goals: 1) data collection and integration; 2) communicating drought risk to critical infrastructure; 3) drought planning and capacity building; 4) coordination of federal drought activity; 5) market-based approaches for infrastructure and efficiency; and 6) innovative water use, efficiency, and technology.

Drought clearly is a complex topic in both scientific and community planning terms, one that requires the kind of coordination this plan describes in order to alleviate the economic burden on affected states and regions. With the growing impacts of climate change in coming decades, this issue can only become more challenging. We have a long way to go, and many small communities lack the analytical and technical capacities they will need. Federal and state disaster policy should be all about building capacity and channeling help where it is needed most. The institutional willingness of the federal government to at least acknowledge this need and organize to address it is certainly encouraging.

Jim Schwab